QUESTION:

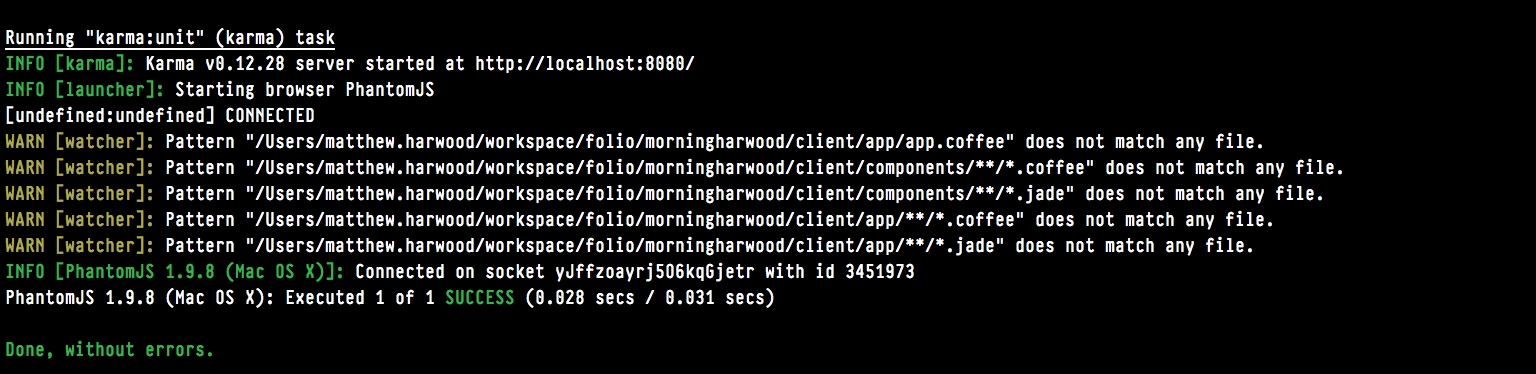

- Why are my tests failing when ui-router-extras (not normal ui-router) is installed?

- How can I use ui-router-extras and still have my tests pass?

If you want to reproduce this quickly, use Yeoman with the angular-fullstack generator, then run `bower install ui-router-extras`.

I found a similar issue with normal ui-router.

- Luckily, normal ui-router works just fine with my testing.

- After installing ui-router-extras I get an ERROR

If I uninstall ui-router-extras, this test passes just fine.

Here's my test:

    'use strict';

    describe('Controller: MainCtrl', function () {

      // load the controller's module
      beforeEach(module('morningharwoodApp'));
      beforeEach(module('socketMock'));

      var MainCtrl,
          scope,
          $httpBackend;

      // Initialize the controller and a mock scope
      beforeEach(inject(function (_$httpBackend_, $controller, $rootScope) {
        $httpBackend = _$httpBackend_;
        $httpBackend.expectGET('/api/things')
          .respond(['HTML5 Boilerplate', 'AngularJS', 'Karma', 'Express']);

        scope = $rootScope.$new();
        MainCtrl = $controller('MainCtrl', {
          $scope: scope
        });
      }));

      it('should attach a list of things to the scope', function () {
        $httpBackend.flush();
        expect(scope.someThings.length).toBe(4);
      });
    });

Here's my karma.conf:

    module.exports = function(config) {
      config.set({
        // base path, that will be used to resolve files and exclude
        basePath: '',

        // testing framework to use (jasmine/mocha/qunit/...)
        frameworks: ['jasmine'],

        // list of files / patterns to load in the browser
        files: [
          'client/bower_components/jquery/dist/jquery.js',
          'client/bower_components/angular/angular.js',
          'client/bower_components/angular-mocks/angular-mocks.js',
          'client/bower_components/angular-resource/angular-resource.js',
          'client/bower_components/angular-cookies/angular-cookies.js',
          'client/bower_components/angular-sanitize/angular-sanitize.js',
          'client/bower_components/lodash/dist/lodash.compat.js',
          'client/bower_components/angular-socket-io/socket.js',
          'client/bower_components/angular-ui-router/release/angular-ui-router.js',
          'client/bower_components/famous-polyfills/classList.js',
          'client/bower_components/famous-polyfills/functionPrototypeBind.js',
          'client/bower_components/famous-polyfills/requestAnimationFrame.js',
          'client/bower_components/famous/dist/famous-global.js',
          'client/bower_components/famous-angular/dist/famous-angular.js',
          'client/app/app.js',
          'client/app/app.coffee',
          'client/app/**/*.js',
          'client/app/**/*.coffee',
          'client/components/**/*.js',
          'client/components/**/*.coffee',
          'client/app/**/*.jade',
          'client/components/**/*.jade',
          'client/app/**/*.html',
          'client/components/**/*.html'
        ],

        preprocessors: {
          '**/*.jade': 'ng-jade2js',
          '**/*.html': 'html2js',
          '**/*.coffee': 'coffee',
        },

        ngHtml2JsPreprocessor: {
          stripPrefix: 'client/'
        },

        ngJade2JsPreprocessor: {
          stripPrefix: 'client/'
        },

        // list of files / patterns to exclude
        exclude: [],

        // web server port
        port: 8080,

        // level of logging
        // possible values: LOG_DISABLE || LOG_ERROR || LOG_WARN || LOG_INFO || LOG_DEBUG
        logLevel: config.LOG_INFO,

        // enable / disable watching file and executing tests whenever any file changes
        autoWatch: false,

        // Start these browsers, currently available:
        // - Chrome
        // - ChromeCanary
        // - Firefox
        // - Opera
        // - Safari (only Mac)
        // - PhantomJS
        // - IE (only Windows)
        browsers: ['PhantomJS'],

        // Continuous Integration mode
        // if true, it capture browsers, run tests and exit
        singleRun: false
      });
    };
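
For anyone comparing the `files` list above against the installed Bower components, here is a rough sketch of a check. The helper name `missingFromKarma` is hypothetical (it is not part of Karma), and `ui-router-extras` is assumed to be the directory name Bower uses for the package. It only does a substring match on `bower_components/<name>/`, so treat it as a quick sanity check rather than a diagnosis.

```javascript
'use strict';

// Hypothetical helper (not part of Karma): returns the component names that
// have no matching 'bower_components/<name>/' entry in a Karma files list.
function missingFromKarma(files, components) {
  return components.filter(function (name) {
    var needle = 'bower_components/' + name + '/';
    return !files.some(function (pattern) {
      return pattern.indexOf(needle) !== -1;
    });
  });
}

// A shortened copy of the files list from the karma.conf above.
var files = [
  'client/bower_components/angular/angular.js',
  'client/bower_components/angular-mocks/angular-mocks.js',
  'client/bower_components/angular-ui-router/release/angular-ui-router.js'
];

// 'ui-router-extras' is an assumed Bower directory name.
console.log(missingFromKarma(files, ['angular', 'angular-ui-router', 'ui-router-extras']));
```

Run against the list in the config above, this would flag `ui-router-extras`, since no path under that directory appears in `files`. Whether that is related to the failing test is not established here.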